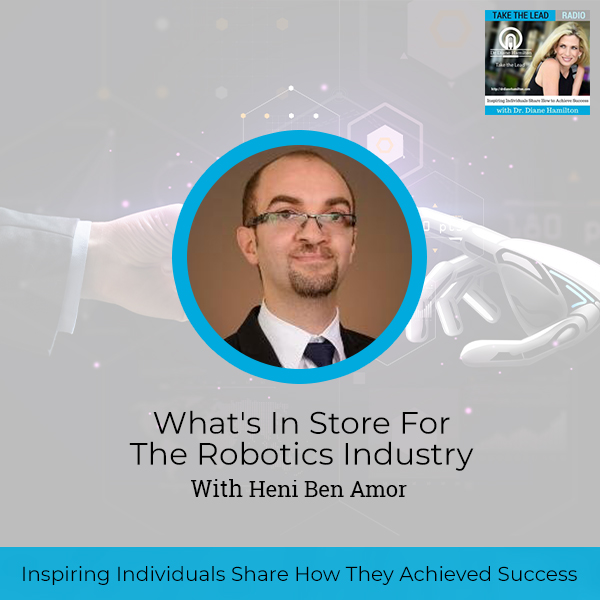

Many say that the future is now, and one of the visible signs that this statement is true is the presence of the robotics industry in our daily lives. Even though its impact on our work, hobbies, and transport is truly powerful, it is still evolving at a rapid pace. Dr. Diane Hamilton sits down with Heni Ben Amor, the Assistant Professor for Robotics at Arizona State University, to discuss how this technology continues to change our lives for the better. He explains its many uses in the leading industries, how 3D printing makes robot development way easier, and how they set boundaries for it not to go beyond its intended purpose. Heni also shares some of the most exciting robotic inventions today, from an earpiece that can help relieve stress, cameras that can send out prompts in case of emergencies, to a machine that can give hugs.

I’m so glad you joined us because we have Dr. Heni Ben Amor, Assistant Professor for Robotics at Arizona State University. He does some amazing work in the field of robotics, and I’m so excited to have him on the show. Stay tuned and we’ll talk to him after this.

—

What’s In Store For The Robotics Industry With Heni Ben Amor

I am here with Heni Ben Amor, Assistant Professor for Robotics at ASU, Arizona State University. He is the Director of the ASU Interactive Robotics Laboratory. It’s nice to have you here, Heni.

Thank you, Dr. Hamilton, for having me. It’s a great pleasure and honor to be on your show. I’m looking forward to some great discussions with you.

I am too. Please call me Diane. We’ll have so much fun. I know we had a chance to connect but not really yet. I work on the Board of Advisors at Radius AI and I saw you speak about some of the robotics things you were working on at ASU here in Arizona. I was fascinated by that because everybody wants to work in robotics. How did you get into this interesting career? I’d like to get a little backstory on you. I saw you’ve won all kinds of awards and I want to find out more about you.

It’s a long winding story if I want to be honest. It also shows how life evolves in interesting and strange ways. I’m German, but I grew up in the country of Tunisia for a couple of years. My parents originated from Tunisia. In Tunisia, I was watching some Japanese robot TV shows. I was fascinated by that, having these robots on screen that protect Earth from aliens. I was interested in that. I started building my little robot out of cotton and cardboard, putting some motors in there, and some small lamps and so on.

That caught the eye of my teacher, and they encouraged me to continue that. I never thought of making it into a career or anything like that. When I was at the University of Koblenz in Germany, I discovered that one of my professors was going to Japan. He was about to participate in a robotics competition. I walked up to the professor and said, “A long time ago, as a kid, I was working on robots. I’m fascinated by this. Do you need an assistant or someone to help you out?”

Indeed, he needed at that time someone to do some programming for him. He recruited me and I started working with him. I fell in love with this topic, AI and robotics, immediately. That was it. I traveled to Japan and met some amazing professors over there. Once I graduated in Germany, I went to Japan to stay longer for some studies on humanoid and android robots. Meaning, robots that have a human-like appearance, human-like skeleton. Some of the robots we worked on were so human-like that for the first couple of seconds, you’d think it’s a human. They would have skin. They would breathe. They roll their eyes and all of that.

I worked on that in Japan, and then I had some journeyman years where I traveled to different countries and worked with different professors. I eventually landed at the Georgia Institute of Technology, Georgia Tech, in Atlanta. I worked there, and from there, I moved on here to Arizona State University. I have great collaborators at many different companies, for example, Radius AI, Facebook, Google X, and so on. It’s more of a team effort than a single person. I hope this gave you a short idea of how life develops and how things are sometimes not planned but turn out nice.

I introduced you as Heni Ben Amor, but you go by Ben. I know it’s Dr. Amor. What do you like to be called? I want to make sure I get the right one.

It’s Heni. Ben means “son of,” and my grandfather’s name is Amor.

It’s interesting to look at some of your work. I was fascinated by the presentation you gave at Radius AI. Years ago, they had an event here in Arizona. They brought the Sophia robot here. She was fascinating to watch. I imagine things have changed considerably since I’ve seen her. She was pretty realistic from a distance. How much more advanced have they got since then?

The robot that we worked on in Japan is substantially more realistic than Sophia. I can even send you a video clip of the robot, especially if you look at the close-up of the robot rolling the eyes and moving its head. It would take you probably a couple of seconds to figure out that it’s a robot and not a human if you’re not already warned about that. Ultimately, if I want to be honest, it’s not the physical appearance that turns out to be important for our perception of robots. It’s often the movement and the behavior. In robotics, we have this concept of an uncanny valley. I’m not sure if you’ve heard about it before?

The underlying idea is that as the visual appearance of a robot improves, our perception of its capabilities and our acceptance of the robot improves. If you have a small robot that doesn’t do much, we think that’s great, but it’s more like a toy. As you’re seeing robots like WALL-E, for example, in the Disney movie, you think, “That’s an impressive robot.” There’s an inflection point where all of this turns negative. If the appearance of the robot is lifelike, the visual physical appearance, but the robot is not moving right, then this can create some feeling of creepiness.

It’s like Schwarzenegger when they had those dolls that did the creepy thing.

There’s some uneasy feeling that this creates. There are some studies on that. There are psychological reasons for that. We see the robot as some undead or a walking dead human being. It’s close to being human, but at the same time, there’s something missing. That’s why a critical axis or dimension that we need to improve is for robots to behave in a human-like fashion, or at least an acceptable fashion. It doesn’t have to be human-like but more in a fashion that’s socially accepted by humans. That’s called the uncanny valley.

The reason for that is if you look at it as a graph, it’s a diagonal graph. On the X-axis, you would have the abilities of the robot. On the Y-axis, we would have the human acceptance of the robot. It would go on a diagonal for quite a while, but then there is this inflection point which falls down. The acceptance of the robot falls into a valley because we think something is off here. Once we get out of the valley, you go up again, and our acceptance of the robots improves again. What that means is, there are these robots in this uncanny valley where there’s a mismatch between the visual appearance and the capabilities of the robot. As a result of that, our acceptance of the robot is relatively low. We think it’s some undead. That’s the uncanny valley.

This is getting to be more like a Westworld-looking robot. Do you like to watch these shows or do you watch them and go, “Come on?”

I’m a great fan of these shows. There’s a lot of inspiration also from Star Wars in my work and a lot of pop culture references. These movies help us think about questions before they truly arise in manufacturing, social robotics, or something like that. We can think ahead of time. What can go wrong? What are the ramifications of our actions as scientists? At the same time, they inspire us. Scenes, for example, from the movie Iron Man have been a great inspiration for me and my work. There are entire papers and research projects that I’ve worked on that are copies of certain scenes from the movie.

What parts of the movie? What kinds of things should I be looking for if I watch Iron Man?

In the first Iron Man, you would see Tony Stark create or assemble his Iron Man suit by himself. It’s more of a collaborative effort together with the robot. He has this robot assistant that would come in and hold an object at the right time. There is also virtual reality there. Iron Man can see the designs, the 3D models, in some hologram in front of him. These kinds of technologies bring together mixed reality, augmented reality, and virtual reality with robotics. The robot helper is something that I’ve been working on.

We would have a robot helper that helps you assemble an IKEA shelf. Think of it as a helper projecting into the environment using a projector. It would project, “Pick up this object. Now, you have to attach it to this object.” While you’re doing that, it will bring over the right screws and hand them over. Once you’re finished, it would stabilize and hold some of the components so that you can then use the screwdriver and so on. Having humans and robots collaborate in this synergistic fashion was an eye-opener in the movie. That is something we would like to reproduce.

We see Roombas and things that go around the house, clean, and do different things. Why aren’t we seeing more robots delivering you a cup of coffee or handing you things? How far off are we from that?

We are quite a while off, I think. It turns out that everything we think is hard for robots turns out to be easy for robots. All the things that come easily to us as humans are ridiculously hard for robots, even things like picking up an object. For us, you don’t think about that anymore. As a human being, you just do it. For robots, I have to plan how to touch the object. What is the object? Identify the structure of the object, then figure out where to place the fingers. How much force to apply? If I move the hand, does the object slip out of the hand?

All of these are complex mathematical and physical computations that we have to perform. It turns out that they often involve complex mathematical concepts and uncertainty. If the robot sees an object, it’s quite uncertain about the shape because maybe illumination has changed how the object looks in the camera image. These are complex things. Our home environments are among the most difficult environments for robots to work in.

A robot can work nicely in what we call a structured environment like in a warehouse and manufacturing plants where everything is flat, where there is a conveyor belt that brings the object to the robot. The robot doesn’t have to go to the object. There are no heaps. Whereas if you go into my kitchen, it doesn’t look like that at all. You will have heaps of stuff left and right, you need to step over things in order to get to the right location. When you’re looking for an object, it’s not where it’s supposed to be.

These unstructured environments are complex for robots to navigate in. When presenting one of my papers, I started by showing a picture of my kitchen, and it was completely messy. I then said, “What I’d like to have is a robot that can turn this messy kitchen into a clean, organized, and structured kitchen.” That’s something that would be good, but we’re still working on it. We are still quite a number of years away from that. Human environments, our homes in particular, are tricky for robots to navigate and do tasks in.

It’s interesting to look at some of the technology. I got a Quest for Christmas, and I was blown away by how much the virtual reality has changed. I haven’t had one of any kind in years. You get in and you actually feel like you’re somewhere else. It’s amazing. When you were saying doing something in the kitchen, it almost seems like you could sit in one room and use that to pick up things in another room. It’s going to be interesting to see how it all changes. Are we going to see dramatic improvements for a while or is it as you say, this is many years off?

Personally, I’m a little bit more on the pessimistic side. It will take a couple of years because there are some fundamental challenges that we have not resolved yet, especially when it comes to robots being accepted in our homes. There are many fundamental challenges there. Where we will see more robots is in theme parks like Disneyland or something like that, where the actual users are in a certain space. The people at Disney could basically say, “Over here you can interact with this robot, and we’ll help you do this certain thing.” They can also structure the environment in a certain way to facilitate that.

They can make It’s a Small World a lot less boring too.

Another example would be in the Disney theme parks, having humanoid robots as stunt performers. For human performers, doing these stunts day after day and over multiple shows is quite dangerous. What Disney is thinking about is using humanoid robots that will do these things. Think about Spider-Man jumping through the air and doing all sorts of flips in the air, and then falling back onto the ground. For a stunt performer, it could be dangerous. If you have a robot, it can do that again and again. Even if something were to happen, you can easily repair the robot and then go on with it.

That’s where we’re going to see the adoption of robotics, in these theme parks and so on. Also, in healthcare environments, under the supervision of a nurse or a doctor, a robot exoskeleton can help a person rehabilitate. It’s rehabilitation robotics. We have that already. There are also robots that perform surgeries or assist in surgery. These kinds of tasks, where there’s still some human supervision in a meaningful way, are where we’re going to see early adoption of these robotics technologies.

There’s also the adoption of autonomous driving. Even there, we’ve seen some challenges. Autonomous driving has a long history, and people may not be aware of that. In the late ‘80s in Europe, there were large projects conducted by the European Union where a car was autonomously driving from Munich to Copenhagen, which is around 1,000 miles. That was already in the late ‘80s. That car was autonomously driving. It would overtake other cars, follow the rules, and so on. We have that technology already.

This technology, in a way, resurfaced in the 2000s. One of the reasons for that was the DARPA Urban Challenge, in which different performers from different universities effectively created their own autonomous cars and performed in this challenge. After that, this attracted a lot of attention from companies like Google. As a result of that, there are now some investments in this domain. Again, some fundamental challenges on how to ensure the safety of people inside the car are not resolved.

What about companies like Cruise that are doing that, are they pretty close to that?

I think they all deal with the same fundamental, theoretical, and ethical challenges. It’s going to take a while. All of these companies are pretty close to 99% accuracy in driving. The question is, what happens when something unexpected occurs? As humans, we are good at performing commonsense reasoning and figuring it out. If something happens, in a split second, you make a decision, applying all of the common sense and knowledge that you have.

For robots, unless it’s programmed or this scenario has been seen before, it’s tricky to figure out what should I do in this scenario. That’s one of the things, dealing with uncertain and new environments. Let’s say, one of these car companies trains and builds their autonomous cars for the American market, and now, you ship it to Germany. In Germany, you’ll have a lot of snowfall. Your windshield will be full of snow. The robot only sees the white screen. What should the robot do in this scenario?

We would need someone to specify that. Think about similar scenarios. You ship the autonomous car to West Africa. You have different environmental conditions. In Africa, it sees an animal that it has never seen before stepping onto the street. Would it recognize that animal? Is the code for that already built-in? Would it somehow have to learn that or perform some common sense reasoning? As humans, we can easily identify it: “This new thing that I see has four legs and it kind of looks like a horse, but it’s striped. Maybe it’s something like a horse, so let’s try to avoid it. It’s an animal.” These kinds of reasoning steps from four legs to an animal to something like a horse and so on are tricky for these autonomous cars.

What does it do at this point if it runs into something it doesn’t know or hasn’t been exposed to? Does it shut down? Does it pick some option that makes no sense?

To be honest, that is left to the manufacturer. It’s not even clear if the car would even recognize that something novel has occurred, that there’s this abnormal situation going on. Even that, identifying that something is off right now, is a skill that we, as humans, are good at, but these autonomous cars would still need to be endowed with this ability to figure out that something’s off here. There’s that gut feeling. It depends on the manufacturer. The manufacturer could have something that says, “If anything is off, then stop the car,” which also is not a good idea if you’re on the highway. It’s a catastrophic situation. That’s why I’m saying there are fundamental challenges we still have to deal with there.

I remember the CSI: Cyber and all that. They had people hacking into cars. How safe is it now even with the systems we have for people to hack into our cars while we’re driving? Is that even an issue or is that Hollywood?

I think it’s an issue. The good news is that there’s a lot of investment and effort going into making cars safe. If you think about it, even our old cars were not necessarily perfectly safe. Perfect safety is a theoretical concept. Potentially, there is always a way of somehow hacking a car and making modifications to it. Car manufacturers are doing a good job of securing a car so that the hacking becomes tricky, but ultimately, it’s possible. That is certainly a risk.

Also, one of the risks is, can we do hacking on a large scale? With a traditional car, someone could hack it or install some device that allows the person to do some mischief from afar but it would still be only on that single car. Google has a company called Waymo, where they do the autonomous taxi service. Imagine someone hacks into Google and is now able to control 1,000 taxi cars at once. It’s a risk. There could be risks for terrorism or large-scale attacks.

For security reasons on some of this stuff, does blockchain fall into any of this to secure it?

I don’t think that’s what’s happening right now. It’s mostly using the right kind of encryption to do that encrypted messaging. Blockchain is one of the avenues where this is going. There are a number of papers that explore using blockchain to secure the communication between a server and a client. The server is the computer that’s controlling a car or sending any critical information to the car. That’s certainly something that’s under consideration but it’s not yet the standard.

It’s interesting to see what’s happened in computers. I graduated from ASU. In probably ‘84, ‘85, I took CIS 100, when they had just built those buildings. It was brand new, it was exciting. They could teach you a computer class. How much has ASU changed in its innovation compared to then? They’re one of the top schools now doing these amazing things. What do you think is one of the coolest things they’re working on there?

I’m impressed by ASU. I say that as someone that is originally from a completely different country and I honestly didn’t hear about ASU before that. Once I arrived in the US, I heard a lot about ASU. That’s what made me also apply here. Among the different things that ASU is doing is doubling down on robotics and things like blockchain and virtual reality. What they did is they hired top faculty from all over the world.

I was also part of that batch of faculty being hired for robotics. We have people here working on robotics in the medical field, robots for rehabilitation. I work on humanoid robots, robots that potentially could be in your home of the future. There are these different facets, and for each one of the facets of robotics, you would find a faculty member here who’s working on that and potentially has a multimillion-dollar lab running projects for all sorts of agencies for science and so on. It’s impressive to see.

In my own lab, among the things that we’re working on together with NASA JPL, we have been working on a robot arm that would be mounted on a satellite. Now, satellites are not just passive devices anymore that are floating in space. They can actively pick up objects, maybe even perform assembly in space. One of the ideas there would be, instead of shipping a big satellite or a big piece of equipment into space, which can be expensive, we could ship more pieces into space and have these kinds of arm-augmented satellites put the pieces together and build something in space.

Another variant of this that we’ve been discussing is the development or the assembly of robots in space. The way it works now is you would have to think about the robot that you want to ship into space. Typically, that’s a project that goes on for years and years. They assemble the robots and then ship them to Mars. If something is off, you’re stuck with the robot that’s on Mars and somehow need to figure out how to make a small adjustment to it using code, but you cannot make a large adjustment.

What we have been proposing is shipping a 3D printer and a small robot arm into space that’s able to pick up the pieces from the 3D printer and assemble them together. Now that you have this ability, you can send up the description or the specification of a new robot and have it assembled in space. We can play around with many different shapes and different behaviors for the robot. We don’t have to think of it ahead of time. We ship the equipment to build the robot on Mars.

As you’re talking about 3D printers, years ago, I attended a Forbes Summit where a woman was wearing a 3D printed dress. It was pretty cool. I was expecting to see more with 3D printers. I teach at a tech school here in town. I teach at a bunch of different universities and one of them is a technical university. They were talking about 3D printers. Are you seeing a lot of use of those in individual homes or is this still something that’s not hit full market?

I don’t think it has hit the full market yet. It has made a substantial impact in the manufacturing world, not necessarily in homes, where it’s mostly for hobbyists and for people who are interested in this. In manufacturing, it has already made a substantial impact. There are all sorts of different 3D printers. Some of them use metal, some of them use other materials. That allows you to prototype rapidly. In robotics, we use this kind of technology in order to quickly go from an idea for a robot to the actual assembled robot.

In our case, we had one of the projects here. This is not necessarily traditional 3D printing, but pretty close to it, where we assembled robots out of cardboard. The underlying idea is, if you have an idea for robots using our tools, you can quickly design the robot. By the way, this is a great collaboration with Professor Dan Aukes from the ASU Polytechnic School. You would think of a robot and using our tools, you can design it.

The specification is then sent to a 3D printer, or in our case a laser cutter, that cuts out the form for this robot from a piece of cardboard. Typically, within an hour, you can put together this piece of cardboard and place a motor inside, together with what’s called a Raspberry Pi, a small computer. You put these pieces in there, the motors and the computer, and you tell the robot, “Go.” It will then be able to do whatever you want.

We also develop machine learning techniques that allow the robot then to learn how to perform skills. We built this turtle robot that was able to learn autonomously how to crawl over sand. The idea there was to create robots that detect landmines. That’s a big topic where, hopefully, we can make an impact also on the lives of people. Right now, there are landmine-detecting robots, but they are expensive and large. If something goes wrong, they blow up, and then all the money and the investments are gone.

Can’t you just put a Roomba that is cheap out there? Let them blow up, who cares, because they’re cheap.

That’s a great idea, but Roombas don’t work well if the surface is not flat. They will not be able to navigate over sand and any of these tricky environments. The idea is the same. You are right. We created some type of turtle version of a Roomba that’s also low cost. Even if it blows up, the total investment for the robot is $40. What that allows us to do in the future is to throw a lot of these low-cost robots into an environment where there are these landmines. They would create a map of where the landmines are so that people could then go in and defuse these landmines or cordon off this area and say, “You should not trespass into this area.” That’s the goal. Again, the idea would be to use these rapid prototyping and low-cost prototyping techniques to quickly go from an idea for a robot to the actual implementation.

As we’re talking about this, I’m thinking about the class that I teach. The students all have come up with ideas for companies that they want to start, whether they want to create robots, record labels, or whatever it is they want to create. A lot of them like computer-based products. If you were talking to a student who was thinking of getting into developing a new product that’s robotics-based, what do you think the next thing is? What would you suggest is an area for them to research?

The good news is that this area is ripe for discovery. There are a lot of different things that they can investigate in this area. Coming up with a good idea of how to use robots in our homes would be interesting. The way to go about that is, instead of trying to create robots that can do everything, to try to create a robot that can do one thing well. That’s often my focus: narrowing the scope down. At ASU, we also have services that help students come up with new ideas. Some of our robotics students come up with ideas that don’t appear robotic, but ultimately, under the hood, they’re robotics.

One of our students developed this device that can help with depression. It’s some earpiece that you put into your ear and it stimulates the vagus nerve. There are many studies that show that nerve plays a role in depression and the general feeling of anxiety. Our students used and leveraged the techniques from robotics and machine learning in order to stimulate this nerve. Think of it as some physical therapy, like a robot for physical therapy.

Thinking about the vagus nerve, I was a pharmaceutical rep for a long time. It’s interesting to me to think about that. It’s more like an earbud in your ear or over the top of the back of your ear. It probably would be around, right?

They’re prototyping it. I don’t know the exact shape of it. They won several awards for this already. That’s an amazing idea. Again, the underlying principles and techniques that you would need for this are exactly the techniques that you would learn in our department here at ASU.

I wish you were there when I was there.

Try to visit us.

I want to.

Please do that and I can show you all the robots that we have here. Among the things I can show you is my teddy bear robot. In my lab, we work closely together with Honda. It’s a funded project from Honda Research Institute where we build a robot that hugs people. It learns to hug people.

How do you keep it from hugging too tight? I think of my chair. I have a massage chair that hugs your calves. It squeezes on to you. Sometimes it hurts. How do you make sure the robot doesn’t hug you too tight?

Some of your insights will be needed there. We should collaborate. The way we do it is by using tactile sensors. Under the skin, the robot also has sensors that can measure the pressure. It can also measure how tightly it’s squeezing the person. Another thing that the robot is doing is it’s learning from human demonstrations. It observes how people are hugging each other, how tight the hug is performed.

From that, it learns. On one hand, how tightly it needs to hug, but also when it needs to release which turns out to be the critical issue here. If a robot holds on to a person for too long, that’s creepy. The tricky thing is to figure out algorithms to do this spatial and temporal reasoning. Reasoning in time, when do I need to do the right action and release the person? Doing the right thing at the wrong time is problematic for robots. What we’ve been working on in my lab are robots that also know when to step in and when to step out and not engage.
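As a rough illustration of the two decisions described here, how tightly to squeeze and when to let go, the following Python sketch shows one control step of a hypothetical hug controller. It is not the lab's actual method: the names `HugProfile` and `hug_step`, the proportional gain, and all numeric values are assumptions made for the example. In the approach described in the interview, the target pressure and the release time would be learned from human demonstrations rather than set by hand.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class HugProfile:
    """Targets a robot might learn from demonstrations (values are made up)."""
    target_pressure: float  # desired contact pressure, arbitrary units
    max_duration: float     # seconds after which the robot should let go


def hug_step(profile: HugProfile, pressure: float, elapsed: float,
             grip: float, gain: float = 0.1) -> Tuple[float, bool]:
    """Return (new_grip, release) for one control step.

    pressure: current reading from the tactile sensors
    elapsed:  seconds since the hug started
    grip:     current grip command in the range [0, 1]
    """
    # Timing check first: holding on for too long is the failure to avoid.
    if elapsed >= profile.max_duration:
        return 0.0, True
    # Simple proportional correction toward the target pressure.
    error = profile.target_pressure - pressure
    new_grip = min(1.0, max(0.0, grip + gain * error))
    return new_grip, False
```

Checking the elapsed time before adjusting the grip reflects the point made above: doing the right thing at the wrong time is the harder problem, so the release decision takes priority over the pressure correction.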

That’s so out of the Temple Grandin movie. I don’t know if you ever saw that movie where she created the thing to hug her because she wanted someone to calm her down. That’s interesting to see all this. I know you’re doing some work, or at least, I met you through Susan Sly and Jeff Cox at Radius AI. Do you do work with Radius AI in general or is it some consulting? I’m curious what your connection is.

The connection is manifold. The co-CEO of Radius AI, Aykut Dengi, is a former faculty member here at ASU. I’m sitting next to his office; my office is immediately next to his. He’s a dear friend of mine, and we had been collaborating for quite a while when he was at ASU. He then moved on to get this position as co-CEO for Radius AI. I was impressed by that. Of course, we still have lunches and dinners together. We developed these ideas of how we can leverage some of the new AI and machine learning techniques to improve the health of people.

As a result of that, I got engaged as a consultant with Radius AI. We’re still collaborating together. They’re also hiring my students, which is great. It’s a great collaboration. Under the hood, what we’re trying to achieve is to be able to track or understand the environment, some situational awareness from camera feeds. Normally, if you have a CCTV camera or some camera that you have in a hospital, it doesn’t give you information about any potential risks that are there.

If someone falls down, it wouldn’t notify you or notify the nurse, “Someone fell down.” That’s one of the things that we’d like to achieve. We’d have these intelligent cameras that can notify people if there is a risk. Similarly, also, especially during the COVID crisis, we’ve been working with Mayo Clinic on improving the safety of the entire hospital staff but also the patients by doing a pre-screening or an early screening of people that could potentially have COVID.

We measure the temperature of a person. We track the movement of the person making sure that they abide by the social distancing norms. We can effectively measure how much the patients have followed the social distancing norm and give a final number at the end of the week, the month, or the day. The doctor will say, “You want to restructure the room because it seems like they’re not following the social distancing norms.”

By restructuring the room and giving additional information to the patients, we could improve this behavior. In a way, it gives some notification back to the patient. At the same time, using these screening techniques, we can quickly identify if someone has a temperature that’s above the typical 100 degrees Fahrenheit, and then the nurse is notified. She can quickly intercept that person and say, “We’ll do an additional test for COVID,” and so on.
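As a rough illustration of the screening logic described here, the two checks can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the system used by Radius AI or Mayo Clinic: the function names, the six-foot distance, and the idea that a tracker reports floor positions in feet are all hypothetical. Only the 100 degrees Fahrenheit threshold comes from the conversation.

```python
from itertools import combinations
from math import hypot

FEVER_THRESHOLD_F = 100.0  # threshold mentioned in the interview
MIN_DISTANCE_FT = 6.0      # assumed distancing norm, not stated in the interview


def has_fever(temperature_f: float) -> bool:
    """Return True when a reading is above the fever threshold."""
    return temperature_f > FEVER_THRESHOLD_F


def distancing_compliance(frames: list[list[tuple[float, float]]]) -> float:
    """Fraction of frames in which every pair of people is far enough apart.

    Each frame is a list of (x, y) floor positions in feet, as a
    hypothetical person tracker might report them.
    """
    if not frames:
        return 1.0  # nothing observed, so nothing violated
    compliant = 0
    for positions in frames:
        too_close = any(
            hypot(ax - bx, ay - by) < MIN_DISTANCE_FT
            for (ax, ay), (bx, by) in combinations(positions, 2)
        )
        if not too_close:
            compliant += 1
    return compliant / len(frames)
```

A score like this, computed over a day or a week, is the kind of "final number" that could prompt staff to rearrange a room, while a fever flag would simply notify a nurse, who takes the actual action.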

It’s fascinating what they’re doing. They were using kiosk devices. Are those considered robotics? What would you call that? Would that be AI and a machine or is that robotics to you?

My definition of a robot is any machine that can physically change the world. If it can move an object or if it can play something. A prosthetic leg would be a robot. These devices don’t necessarily fall in the category of a robot, but they use the same techniques: machine learning, computer vision, and all the controls and filtering techniques from robotics, to achieve a similar thing. However, in the case of these kiosks, the actual physical action is still left to a nurse because we want to be safe.

We could also have a robot maybe go to a person and say, “Would you please follow me.” That could be a potential avenue to investigate in the future. We’ve been thinking about that. For now, we want to keep it safe and engage the hospital staff that is trained for these scenarios so that they can interact with the person. At the same time, there is anything that can be done by our kiosk, like asking a person if they have an appointment, or whether they’ve been to another country in the last fourteen days, and so on. These are the typical questions that you get from the nursing staff. Of course, it takes time from them which they could devote to helping someone. Simple questions and screenings like these, we can push onto our kiosk. From my point of view, even though it’s not necessarily a robot, it’s using the same techniques.

It’s fascinating to see where everything is going. I’ve interviewed Jürgen Schmidhuber and different people who’ve dealt with AI. He was saying robots eventually will go to different worlds, they’ll be the future and we’re going to be gone. Do you think that’ll be the next generation? Humans are no longer needed someday, it’s all gone, other planets, and different things. The world is going to end. If you’ve read about astrophysics, the sun is going to implode or whatever. I am curious, do you see something happening where the robots turn out to be the next thing and we’re not?

I don’t subscribe to this idea. Absolutely not. Honestly, as someone who’s in the field, all of that is exaggerated. Ultimately, what the robot is doing is following relatively specific rules and statistical measures. Robots will not get any thoughts or sentient behavior anytime soon. As I mentioned earlier, they are having trouble picking up an object. If you give your Coke cans to a robot, it’s going to struggle with that for the next 30 minutes. I’m not convinced that they will take over the world anytime soon.

How do you know they’ll follow them? If it’s a learning machine, can they learn how to not follow the rules eventually?

One thing I’d like to say about machine learning, especially for robots, is that it is still in its infancy. Despite everything that we see on the news, it’s still in its infancy. Robots are not able to learn even simple things. We’re working on that, so that may change in the future, but it’s not the case now. At the same time, we’re also working on techniques to verify the behavior of robots: being able to specify, in a logical, formal way, what the robot can do and what the robot cannot do.

For example, we have a collaboration with my colleague Georgios Fainekos, where we investigate how to specify these boundaries for robots formally. One thing I need to say is, this is not limited to robots. One of the tasks we’re applying this to is an artificial pancreas for people with diabetes. You can have this artificial pancreas where you want to make sure that it never releases insulin above a certain threshold or it never does an action that can be harmful to a person. Having ways of verifying that the actions of a robot or device are within our specification is something important that we’re working on. It’s true for an artificial pancreas or a robot that hugs you. A robot that’s hugging should not squeeze too tight, and an artificial pancreas should not release too much insulin, and so on. It’s the same principle applied to different scenarios.
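To make the "same principle, different scenarios" point concrete, here is a minimal sketch of a bound that a commanded value must stay within. It is only an illustration: formal verification, as described in the conversation, aims to prove such properties ahead of time, whereas this sketch shows the simpler idea of checking and clamping a command at run time. The `Bound` class, the `safe_command` helper, and the numeric limits are hypothetical and carry no clinical or engineering meaning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bound:
    """Closed interval that a commanded value must stay within."""
    low: float
    high: float

    def satisfied_by(self, value: float) -> bool:
        """Check whether a value already respects the specification."""
        return self.low <= value <= self.high

    def enforce(self, value: float) -> float:
        """Clamp a proposed command into the allowed interval."""
        return min(max(value, self.low), self.high)


# Illustrative limits only; assumptions, not values from the interview.
MAX_INSULIN_UNITS = Bound(0.0, 2.0)
HUG_FORCE_NEWTONS = Bound(0.0, 15.0)


def safe_command(proposed: float, bound: Bound) -> float:
    """Return the command that is actually sent to the device."""
    return bound.enforce(proposed)
```

The same `Bound` type serves both the insulin pump and the hugging robot: whatever a learned controller proposes, the value sent to the hardware never leaves the specified interval.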

It is interesting watching the Grey’s Anatomys of the world, with the 3D-printed organs and the things that they talk about. There’s so much advancement. I think about how many times they talked about Moore’s law and everything doubling in tech, improving, and all these things that have changed. Why aren’t they making us travel any faster? What happened to Elon’s tubes that were going to shoot us through and all that? What happened to travel? There are no transporter devices. You talk about things we get from the movies and TV. Going to Mars and things like that are improving, but not going to Europe. How come?

To be honest, that’s out of my field of expertise. One thing I like to say about technology, in general, is that it creeps up on us. Eventually, it’s there. If you try to follow it, you’re trying to prognosticate how it’s going to behave or how technology is evolving. Most of the time, you’re probably going to be wrong about it. If you think of our cell phones, all the different things that our cell phones can do go back to a lot of developments in the field of AI, machine learning, and robotics.

Think about your cell phone being able to automatically recognize what’s written on a piece of paper so that you can archive everything that you have written down. If you go to one of these supermarkets, you can point your phone at some items and it will automatically recognize them and even make recommendations, “You can get the same item somewhere else.” Under the hood, that’s also machine learning, computer vision, and the same techniques, but you don’t realize it anymore. It has become this invisible thing.

That’s often true about technology. Oftentimes, it’s there and you don’t realize it anymore. Maybe in a couple of years, we can travel substantially faster but we won’t have realized that we can do that. That’s my view of technology. Generally, when it comes to technology, I’m an optimist. My view is, if you want it to be used for good, then be involved. Don’t leave it to other people. Be involved and give your opinion. If you have the opportunity, be a scientist.

Even if someone is not a scientist, they can reach out to their local university and be engaged in projects, or their children can be engaged in projects. We have great collaborations with high school students. Honestly, many of the high school students here in the area are amazing scientists, way better than I am. This is not flattering them or anything. There are amazing students in the area. If there’s anything I’d like to do, it is to empower them to become the roboticists of the future.

Robotics Industry: If you want robots to be used for good, then be involved and give your opinion. Don’t just leave it to the experts to discover.

It is amazing to see what some of these ideas are. You were talking about some of the things we already invented. I was surprised. I used to always talk about QR codes years ago, and then you didn’t see much of them. Because of COVID, now everybody’s menus are all on QR. You don’t know when they’re going to come back in force because they need it for something else. What do you think is the next big thing to watch? Is there one big prediction of something that you can’t wait for?

I’m a big fan of robots that can also explore the world to some degree by themselves. I’ve seen some of your talks and you talk about curiosity, that curiosity is one of the driving factors, if not the key factor, for success in life. Being curious about new opportunities, new jobs, new books, and so on. We are organizing a workshop on robot curiosity. Would it help to have this ability in a robot on Mars to explore some new rocks or environments curiously? Is that always helpful?

We have the saying, “Curiosity killed the cat.” Too much curiosity seems to be a bad thing. I’m not sure about that. That’s among the things that we’re investigating in the workshop that I’ll be organizing together with colleagues from MIT and the university. It’s going to happen at the end of May 2021 or the beginning of June 2021. We have a great lineup of speakers from all over the world who will be talking about curiosity, and whether curiosity in some algorithmic, computer science form could help create the next generation of robots that are adapted to the environment and the needs of the human user.

What’s interesting to me about that is that what I focus on are the things that inhibit curiosity in humans, which robots wouldn’t have. Some of that is fear. Assumptions are another, because you make false assumptions based on whatever input there is, which is one of the things that we do. For us, that’s the voice in your head, but for them, it would be inputted data that’s maybe not correct. Technology, with humans, is over- and underutilization of it. Environment, for humans, is anybody you’ve ever come into contact with and things they’ve told you. Of course, with them, it would be anything that’s been programmed. That’s fascinating. We’ll have to talk more about that. What you’re working on is absolutely fascinating. I’m sure a lot of people want to reach you and find out more about what you’re doing. How can they find you?

Thank you so much. I’m a professor at ASU. The first step is to go on the website of ASU, www.ASU.edu. On the website of ASU, they can also find me if they look for Heni Ben Amor on robotics. At the same time, at ASU, we have other excellent faculty that are working on robotics, machine learning, and AI. I’m encouraging them to browse through the website of ASU. In the News section, we often report about the new developments from ASU in the field of robotics.

If they’re interested in reaching out to me, my email is HBenAmor@ASU.edu. We try to do these outreach activities because we have excellent potential here in Arizona and in the area around ASU. What we’d like to do is to foster this idea of curiosity in the field of robotics, with people being interested in it. Even if you don’t want to become a robotics engineer, but you’re interested in that, feel free to reach out to us. We do a lot of different events where we try to give an idea of what’s going on in robotics. For example, among the things that we organize is a panel at the Phoenix Comic-Con, where we talk about the relationship between comics and modern-day robotics. We’re interested in working with people here from the area. There’s certainly some way for us to engage going forward.

That’s awesome. This was so interesting. Thank you so much, Heni, for being on the show. I enjoyed it.

Thank you so much for this great opportunity.

You’re welcome.

—

I’d like to thank Heni for being my guest. We get so many great guests on the show. If you’ve missed any past episodes, you can go to DrDianeHamilton.com. If you go to the blog, you could read them and listen to them. Of course, we’re on the AM/FM stations listed on our sites and all the podcast stations but sometimes it’s nice to read them and tweet out tweetable moments. I hope you enjoyed this episode. I hope you join us for the next episode of Take the Lead Radio.

Important Links:

- Dr. Heni Ben Amor – LinkedIn

- Radius AI

- Cruise

- Raspberry Pi

- Jürgen Schmidhuber – Previous episode

- www.ASU.edu

- Heni Ben Amor

- HBenAmor@ASU.edu

About Heni Ben Amor

Heni Ben Amor is an Assistant Professor for robotics at Arizona State University. He is the director of the ASU Interactive Robotics Laboratory. Ben Amor received the NSF CAREER Award, the Fulton Outstanding Assistant Professor Award, as well as the Daimler-and-Benz Fellowship. Prior to joining ASU, he was a research scientist at Georgia Tech, a postdoctoral researcher at the Technical University Darmstadt (Germany), and a visiting research scientist in the Intelligent Robotics Lab at the University of Osaka (Japan). His primary research interests lie in the fields of artificial intelligence, machine learning, robotics, human-robot interaction and virtual reality. Ben Amor received a Ph.D. in computer science from the Technical University Freiberg, focusing on artificial intelligence and machine learning.

Love the show? Subscribe, rate, review, and share!
